Discover what deepfakes are, how to spot them, and how to detect them with AI-powered tools. This practical, engaging tutorial feels more like detective work than tech jargon and will leave you better equipped against fake media.

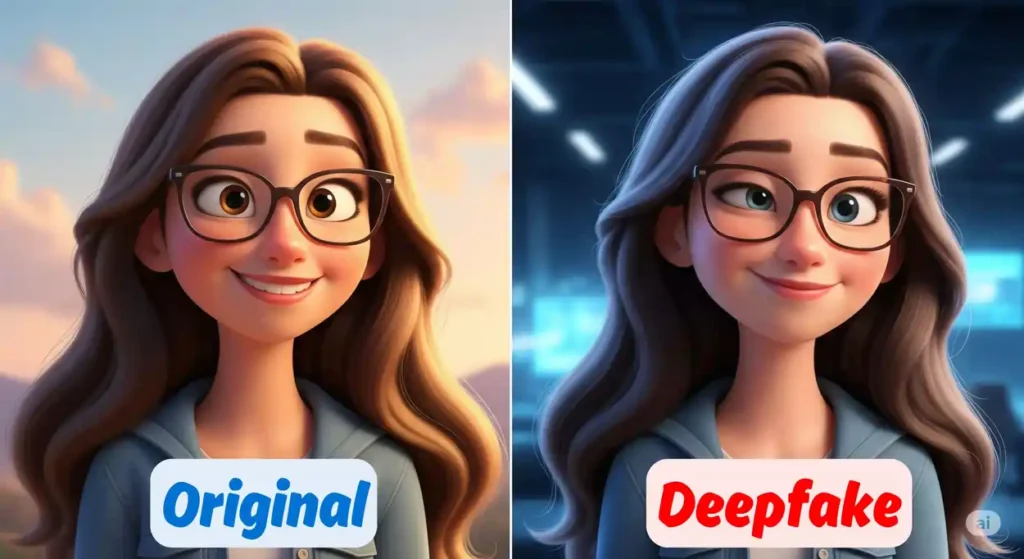

What Is a Deepfake?

Deepfakes are synthetic videos or audio generated by AI, often with GANs (Generative Adversarial Networks) or similar generative models, to place someone's face onto another body or make someone say things they never said. This media can be eerily realistic, posing serious risks of misinformation, identity fraud, and social manipulation. Using tools to check for deepfakes is now essential, and experts call for vigilance and reliance on reputable methods such as TrueMedia.org's platform.

Why Detecting Deepfakes Matters

Deepfakes can break trust, damage reputations, and even influence public opinion. In some places, they are illegal when used for fraud or non-consensual imagery. Understanding how to check for deepfakes helps protect individuals and businesses alike. Whether you’re verifying a viral clip or protecting your brand, knowledge is power.

Top Free AI-Powered Tools for Deepfake Detection

Here are some reliable tools you can use right away; many of them are free to try:

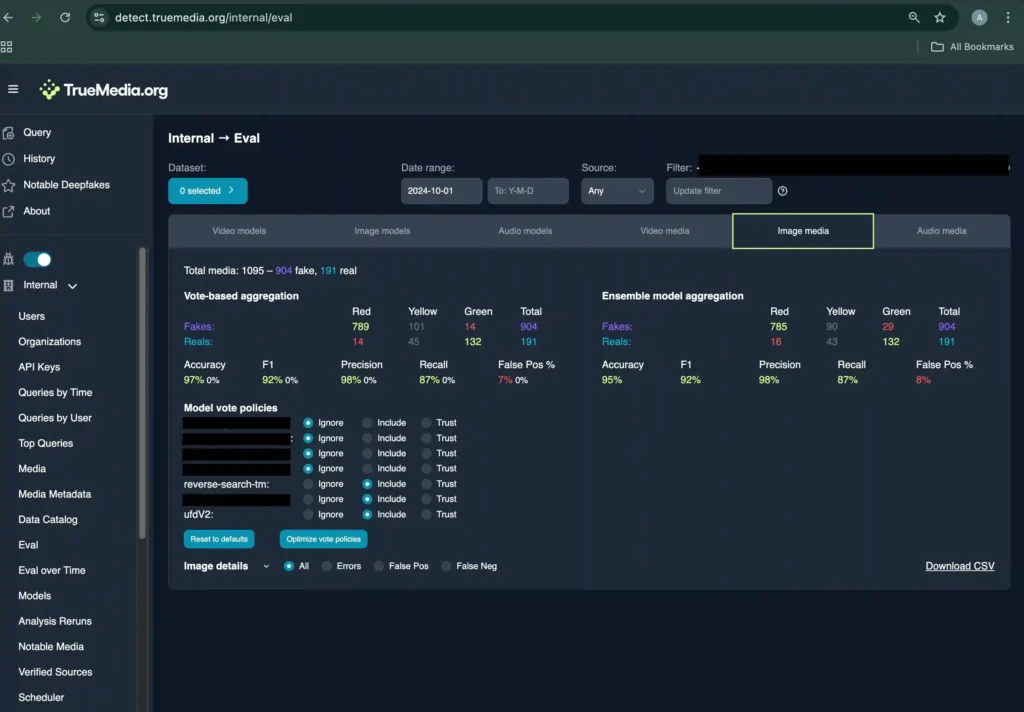

- TrueMedia.org Detection Tool: Offers open access to AI detection tools designed for fact-checkers and journalists. Great for checking content in real time.

- AI Video Detector by CreatorSols: Upload your video or paste a URL, get analysis instantly with confidence scoring. Supports common formats.

- Resemble Detect: Simple, audio-focused detector. Upload audio clips or links to check for synthetic voice alterations.

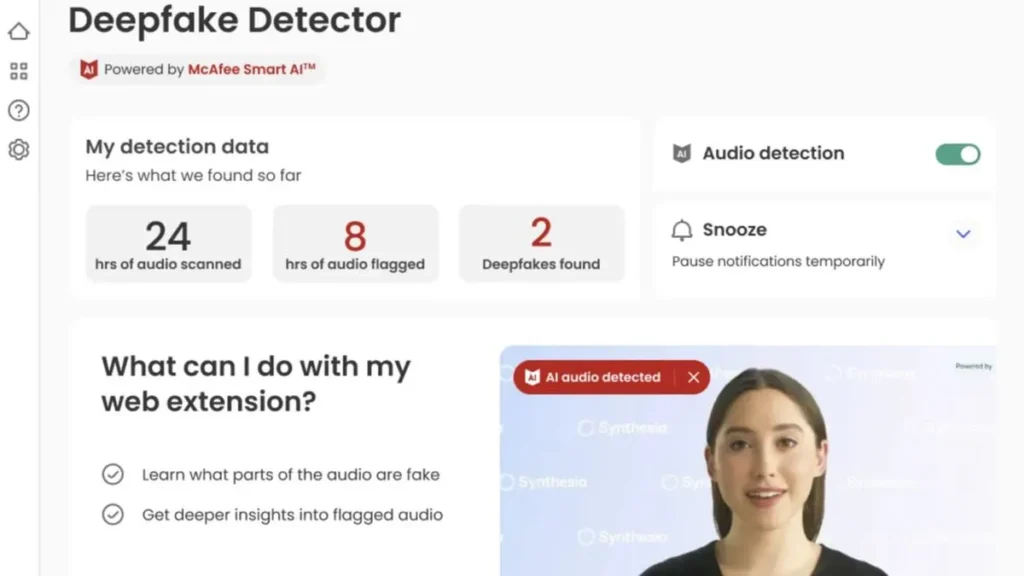

- Deepfake Detector (browser extension): Works on YouTube, TikTok, and Instagram. Scans videos live and flags possible deepfakes inside your browser.

These tools combine machine learning, facial morphology analysis, voice pattern checks, and forensic metadata inspection to build a verdict.
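The exact logic each service uses to reach its verdict is not described here, so the following is only a minimal sketch of one common approach, late fusion: each analysis produces its own fake probability, and a weighted average turns them into a single verdict. The signal names, weights, and the 0.5 threshold are hypothetical values chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Signal:
    name: str                # which analysis produced this score (hypothetical names below)
    fake_probability: float  # 0.0 = confidently real, 1.0 = confidently fake
    weight: float            # how much this signal should count (assumed values)


def fuse_signals(signals: list[Signal], threshold: float = 0.5) -> tuple[float, str]:
    """Late fusion: weighted average of per-signal fake probabilities, then a threshold."""
    total_weight = sum(s.weight for s in signals)
    if total_weight <= 0:
        raise ValueError("need at least one signal with a positive weight")
    score = sum(s.fake_probability * s.weight for s in signals) / total_weight
    verdict = "likely fake" if score >= threshold else "likely real"
    return score, verdict


signals = [
    Signal("facial_morphology", 0.82, weight=2.0),
    Signal("voice_pattern", 0.70, weight=1.5),
    Signal("metadata", 0.40, weight=0.5),
]
score, verdict = fuse_signals(signals)
print(f"{score:.2f} -> {verdict}")  # → 0.72 -> likely fake
```

A weighted average is the simplest fusion rule; production detectors may instead feed all of these features into a single trained model.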

How to Spot a Fake Video Manually

Start with your own eyes: many deepfakes still fail subtle tests of human realism. Watch for:

- Irregular blinking patterns or mismatched facial muscle movement

- Odd lighting across face edges or inconsistent shadows

- Mouth movement not matching speech sounds (phoneme-viseme mismatch)

- Image artifacts, flickering pixels, or blurring in compressed uploads

This human intuition complements automated tools. It is also featured in broader security guidance such as Supply Chain Attacks: Risks, Examples & Protection Guide, which helps frame detection strategies within security workflows.
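Blink checks can also be partly automated. The sketch below uses the eye aspect ratio (EAR), a landmark-based measure that falls toward zero as the eye closes, to count blinks and estimate a blink rate. It assumes you already have six (x, y) landmarks per eye for each frame from a face-landmark detector (that step is not shown), and the 0.21 threshold and two-frame minimum are assumed starting values, not calibrated settings.

```python
from __future__ import annotations

import math


def eye_aspect_ratio(eye: list[tuple[float, float]]) -> float:
    """Eye aspect ratio from six landmarks ordered p1..p6.

    p1 and p4 are the eye corners, p2/p3 lie on the upper lid, p6/p5 on the lower lid.
    EAR = (|p2 - p6| + |p3 - p5|) / (2 * |p1 - p4|), which shrinks as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ears: list[float], threshold: float = 0.21, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames whose EAR is below `threshold`."""
    blinks = 0
    run = 0
    for ear in ears:
        if ear < threshold:
            run += 1
            continue
        if run >= min_frames:
            blinks += 1
        run = 0
    if run >= min_frames:  # eye still closed when the clip ends
        blinks += 1
    return blinks


def blinks_per_minute(ears: list[float], fps: float,
                      threshold: float = 0.21, min_frames: int = 2) -> float:
    """Blink rate over the whole clip, given one EAR value per frame."""
    minutes = len(ears) / fps / 60.0
    if minutes == 0:
        return 0.0
    return count_blinks(ears, threshold, min_frames) / minutes


# Example: 30 EAR values at 30 fps (one second) containing one three-frame blink.
ears = [0.30] * 10 + [0.10] * 3 + [0.30] * 10 + [0.10] + [0.30] * 6
print(count_blinks(ears))  # → 1 (the lone low frame is too short to count)
```

An unusually low or irregular rate is only a weak hint: newer generators often blink convincingly, so treat the number as one clue to weigh alongside the others.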

Deepfake Detection Tutorial: Step-by-Step

Here’s how to analyze a video with an online tool:

Step 1: Choose Your Tool & Prepare Content

Select one: TrueMedia.org's online tool, AI Video Detector, or a browser extension. Either upload the video file (under 100 MB) or paste a URL if the video is on YouTube or TikTok. Higher-resolution clips give more accurate results.
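Before uploading, a quick local check can catch files a tool is likely to reject. This is a minimal sketch that assumes a 100 MB cap (treated here as 100 MiB) and a hypothetical list of accepted video extensions; check your chosen tool's actual limits.

```python
from __future__ import annotations

from pathlib import Path

# Assumed limits for illustration; check the documentation of the tool you actually use.
MAX_UPLOAD_BYTES = 100 * 1024 * 1024  # "under 100 MB", treated here as 100 MiB
VIDEO_EXTENSIONS = {".mp4", ".mov", ".webm", ".mkv", ".avi"}  # hypothetical accepted formats


def check_upload(path: str | Path) -> list[str]:
    """Return problems that would block or weaken an upload; an empty list means OK."""
    p = Path(path)
    if not p.is_file():
        return [f"{p} does not exist or is not a file"]
    problems: list[str] = []
    if p.suffix.lower() not in VIDEO_EXTENSIONS:
        problems.append(f"unexpected file type {p.suffix!r}")
    size = p.stat().st_size
    if size >= MAX_UPLOAD_BYTES:
        problems.append(f"file is {size / 1024 / 1024:.1f} MiB; trim it below the upload limit")
    return problems
```

If a file is too large, trimming it to the suspicious segment usually preserves more detail than re-compressing the whole clip, which matters because higher-resolution input gives more accurate results.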

Step 2: Let the Algorithm Analyze

Each tool shows a timeline or confidence report. Typically, green indicates likely authentic content, while red flags come with an explanation of what was detected. Some interfaces (like TrueMedia.org's) present heatmaps and the outputs of several AI models side by side so investigators can weigh the evidence; the individual models' verdicts often differ from row to row.
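If a tool lets you export its timeline as per-frame fake scores (an assumption; many only display a chart), you can pull out the stretches worth rewatching yourself. In this sketch the 0.7 threshold and three-frame minimum are hypothetical choices, and short spikes are ignored as probable noise.

```python
from __future__ import annotations


def flagged_segments(frame_scores: list[float], fps: float,
                     threshold: float = 0.7, min_frames: int = 3) -> list[tuple[float, float]]:
    """Return (start_s, end_s) ranges where the per-frame fake score stays >= threshold.

    Runs shorter than `min_frames` frames are dropped as likely noise.
    """
    segments: list[tuple[float, float]] = []
    start = None  # index of the first frame in the current high-score run
    # A -inf sentinel guarantees a run that reaches the last frame still gets closed.
    for i, score in enumerate(list(frame_scores) + [float("-inf")]):
        if score >= threshold:
            if start is None:
                start = i
        elif start is not None:
            if i - start >= min_frames:
                segments.append((start / fps, i / fps))
            start = None
    return segments


# Hypothetical export: 20 frames at 2 fps.
scores = [0.1] * 5 + [0.9] * 6 + [0.2] * 4 + [0.8] * 2 + [0.1] * 3
print(flagged_segments(scores, fps=2))  # → [(2.5, 5.5)]
```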

Step 3: Review the Report Carefully

Look for:

- Probability scores (e.g. 95% real vs 5% fake)

- Anomalies noted in blinking or facial landmarks

- Audio inconsistencies in signal analysis

That rich visual breakdown helps confirm whether the media is genuine or fake. Tools like Resemble Detect add metadata and voice-rhythm analysis for audio-specific detection.

Step 4: Cross-Check with Manual Signs

Compare the tool’s conclusion with your own visual cues. If the tool indicates high fake probability and you also spot blinking or mouth-sync flaws, your confidence in the result grows.
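Mouth-sync flaws can be checked roughly in code as well. This sketch is a crude proxy for phoneme-viseme matching, not what dedicated detectors do: it slides a per-frame mouth-opening signal against per-frame audio loudness and reports the shift with the highest Pearson correlation. It assumes you have already measured mouth opening (for example, the distance between upper- and lower-lip landmarks) and resampled audio loudness to the video frame rate; both inputs and the search window are hypothetical.

```python
from __future__ import annotations

import math


def pearson(a: list[float], b: list[float]) -> float:
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)


def best_av_lag(mouth_open: list[float], audio_energy: list[float],
                max_lag: int = 10) -> tuple[int, float]:
    """Return (lag, correlation) for the shift that best aligns mouth and audio.

    Positive lag: audio trails the video by `lag` frames; negative: audio leads.
    """
    n = min(len(mouth_open), len(audio_energy))
    if n < 2:
        raise ValueError("need at least two frames of each signal")
    max_lag = min(max_lag, n - 2)  # keep at least two overlapping frames
    best_lag, best_r = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            a, b = mouth_open[: n - lag], audio_energy[lag:n]   # mouth[i] vs audio[i + lag]
        else:
            a, b = mouth_open[-lag:n], audio_energy[: n + lag]  # mouth[i - lag] vs audio[i]
        r = pearson(a, b)
        if r > best_r:
            best_lag, best_r = lag, r
    return best_lag, best_r


# Synthetic example: the audio track is the mouth signal delayed by 3 frames.
mouth = [0, 1, 0, 0, 1, 1, 0, 0, 0, 1, 0, 1, 1, 0, 0, 0, 1, 0, 0, 1]
audio = [0] * 3 + mouth[:-3]
lag, r = best_av_lag(mouth, audio, max_lag=5)
print(lag, round(r, 3))  # → 3 1.0
```

A best lag of more than a few frames, or a weak peak correlation, is a cue to rewatch the clip closely rather than a verdict on its own.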

Example Walkthrough (Screenshots Overview)

The screenshots above come from two sample detection tools: one shows real-time heatmap output and probability bars, and the other scans social media videos in the browser. Visual interfaces like these make deepfake detection approachable for non-technical users.

Are Deepfakes Illegal?

Creating or distributing non-consensual deepfake content, such as fake pornography, or using deepfakes to spread political misinformation can be illegal in many regions, and enforcement is growing. If you're concerned about legal or ethical use, consult local laws or reliable reporting tools. So, are deepfakes illegal? The answer varies by jurisdiction, so always err on the side of caution.

Tips: What to Do, What to Avoid, and Top Secret Tricks

If you're interested in protecting your digital projects or building secure backend systems, the same verification skills apply. For secure remote browsing, check out How to Make Your Own VPN Using Raspberry Pi 5.

Do:

Use at least two detection tools to confirm suspicious media. Combine tool output with your own visual review. Keep source videos at the highest available quality.

Avoid:

Blindly trusting one tool without analysis. Testing low-resolution or heavily compressed clips, where accuracy drops fast. Relying on a single sign, like a voice change, without corroborating evidence.
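These do's and don'ts boil down to a simple decision rule. Here is a minimal sketch of it, assuming each tool reports a fake probability between 0 and 1 and that you count the manual red flags you spotted yourself; the tool names and the 0.7/0.3 thresholds are hypothetical.

```python
from __future__ import annotations


def cross_check(tool_scores: dict[str, float], manual_flags: int,
                fake_at: float = 0.7, real_at: float = 0.3) -> str:
    """Combine fake probabilities from two or more tools with your manual red-flag count.

    Thresholds are hypothetical; tune them to the tools you actually use.
    """
    if len(tool_scores) < 2:
        raise ValueError("use at least two detection tools")
    says_fake = [name for name, p in tool_scores.items() if p >= fake_at]
    says_real = [name for name, p in tool_scores.items() if p <= real_at]
    if says_fake and says_real:
        return "tools disagree: investigate further"
    if len(says_fake) == len(tool_scores):
        # Tools agree on fake; manual cues (blinking, lip sync, lighting) corroborate it.
        return "likely fake" if manual_flags > 0 else "tools say fake: look for manual cues"
    if len(says_real) == len(tool_scores):
        return "likely real" if manual_flags == 0 else "tools say real, but you found red flags"
    return "inconclusive: get a higher-quality copy and re-test"


print(cross_check({"tool_a": 0.91, "tool_b": 0.84}, manual_flags=2))  # → likely fake
print(cross_check({"tool_a": 0.91, "tool_b": 0.12}, manual_flags=0))  # → tools disagree: investigate further
```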

Top secret tip:

Extract the video's metadata (EXIF data, creation timestamps) using media inspection tools, and compare it with the claimed publishing date; a mismatch is a red flag. Advanced users can set up open-source models (like DeepFake-O-Meter v2.0 on GitHub) for custom validation.
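For the metadata check, one option is ffprobe (part of FFmpeg), which can print a container's format-level tags as JSON. The sketch below assumes ffprobe is installed and that the file still carries a `creation_time` tag; many platforms strip or rewrite metadata on upload, so a missing or reset timestamp proves nothing either way. The one-day tolerance is an arbitrary illustrative choice.

```python
from __future__ import annotations

import json
import subprocess
from datetime import datetime, timedelta, timezone


def probe_format(path: str) -> dict:
    """Run ffprobe (must be on PATH) and return its format-level JSON output."""
    result = subprocess.run(
        ["ffprobe", "-v", "quiet", "-print_format", "json", "-show_format", path],
        capture_output=True, text=True, check=True,
    )
    return json.loads(result.stdout)


def creation_time(probe: dict) -> datetime | None:
    """Read format.tags.creation_time, or None if the file no longer carries it."""
    raw = probe.get("format", {}).get("tags", {}).get("creation_time")
    if raw is None:
        return None
    # ffprobe typically prints e.g. "2024-05-03T09:30:00.000000Z"; Pythons before 3.11
    # need the "Z" spelled as "+00:00" for fromisoformat().
    return datetime.fromisoformat(raw.replace("Z", "+00:00"))


def check_against_claim(created: datetime | None, claimed: datetime,
                        tolerance: timedelta = timedelta(days=1)) -> str:
    """Compare the embedded creation time with the claimed date (both timezone-aware)."""
    if created is None:
        return "no creation time: metadata missing or stripped, which proves nothing"
    if created > claimed + tolerance:
        return "red flag: file was created after the claimed date"
    if claimed - created > tolerance:
        return "mismatch: file predates the claim; check for recycled footage"
    return "consistent with the claimed date"


# Offline example using a hand-written dict shaped like ffprobe output.
probe = {"format": {"tags": {"creation_time": "2024-05-03T09:30:00.000000Z"}}}
claimed = datetime(2024, 5, 1, tzinfo=timezone.utc)
print(check_against_claim(creation_time(probe), claimed))
# → red flag: file was created after the claimed date
```

For a real file, pass `probe_format("suspect.mp4")` to `creation_time` and compare the result with a timezone-aware claimed date; `suspect.mp4` is a placeholder name.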

Why Deepfake Detection Matters Beyond Personal Use

In a world where misinformation can swing elections or destroy reputations, knowing how to detect deepfakes is vital for students, journalists, and professionals alike. It belongs to the larger digital-trust landscape, much like the practices in 10 Preventive Cybersecurity Measures You Must Know in 2025, and helps protect the integrity of online media. Think of detection as a digital literacy skill, like verifying sources in research or backing up your own data.

Final Takeaway

Knowing how to detect deepfakes using AI is no longer optional; it's essential. When a tool shows a high fake probability and you also see visual inconsistencies, treat the media with caution. Follow a method: evaluate, analyze, confirm with a second tool, then decide.

By using free tools like TrueMedia.org, AI Video Detector, and browser plug-ins, anyone can become the first line of defense against fake content. Combine that with manual checks and metadata comparison for high confidence.

Stand up for truth. Learn detection techniques. Spread awareness. And always question before sharing. Because in the age of synthetic media, critical thinking is your best protection.

Deepfakes are AI-generated videos or audio that mimic real people, often used to spread misinformation or defame someone. Knowing how to detect deepfakes helps verify content, protect reputations, and prevent fraud—especially in social media, news, or financial contexts.

Reliable tools include TrueMedia.org, AI Video Detector by CreatorSols, Sensity AI, WeVerify (browser extension), and Vastav.AI. These offer heatmaps, probability scores, metadata analysis, and audio-video consistency checks to reveal manipulated media.

Upload the video to an AI detector to get a confidence score and visual anomalies. Then check for human clues: blinking frequency, mismatched lip-syncing, odd shadows. Combining both methods improves accuracy and helps reveal even subtle edits.

Laws vary: Denmark and some U.S. states now ban non-consensual deepfake distribution, and Japan criminalizes intimate deepfake content. Broader legal frameworks address privacy, defamation, and fraud related to deepfakes, while the U.S. TAKE IT DOWN Act specifically targets the publication of non-consensual intimate imagery, including AI-generated forgeries.

Professionals and organizations typically use enterprise-grade tools like Reality Defender, Sensity, and Hive AI. These support multi-format deepfake detection, real-time APIs, and explainable reports, making them well suited to fact-checking, media verification, and fraud-prevention workflows where accuracy matters most.